One of my main sources of inspiration is when I believe others are doing something wrong. Recently, StarCityGames has been publishing an article series called Limiting Chance, and it’s presented as a bunch of numbers related to a situation and some graphs to go along with it.

The probability behind almost everything in Magic is pretty simple, and can be done almost entirely using just your intuition and two mathematical concepts: the hypergeometric distribution and Bayes’s theorem. The purpose of this article is not to feed you the results for a very specific case, but instead to equip you with the knowledge to solve the situations you think warrant attention and are worthy of further study. If I were doing this work for myself, I would use R or MATLAB thanks to their power, but in order to increase the accessibility of this article, I’ll be doing everything using just standard functions in Excel (and here’s a link to download the spreadsheets used).

In Part 1 of this series, we’ll cover the hypergeometric distribution in some detail followed, by Part 2 covering Bayes’s Theorem, Part 3 combining the two, and (perhaps) Part 4 covering more advanced methods.

Notation

Before I get into the math, it’s necessary to define some notation that’s going to come up a lot in this article.

n!– This is said as “n factorial” and is equal to the product of all integers equal to or less than n: n!=n × (n-1) × (n-2) × . . . × (2) × (1). By definition, 0! = 1.

– This is said as “n choose k” and is the number of combinations (without taking order into account) possible when taking k items from a set of size n. It is also known as the binomial coefficient and can be expressed as

– This is said as “n choose k” and is the number of combinations (without taking order into account) possible when taking k items from a set of size n. It is also known as the binomial coefficient and can be expressed as  for all 0 ≤ k ≤ n (and is 0 when k > n). For example,

for all 0 ≤ k ≤ n (and is 0 when k > n). For example,  .

.

P(x) – This represents the probability of event x occurring, where 0 ≤ P(X) ≤ 1 (if you want to convert a probability into a percent, just multiply by 100%). For example, P(I am in the US on 6 October 2012) = 0.

∩ -This is the symbol for the intersection of two sets, but it is essentially equivalent to saying “and”. If we want to express the probability of two events occurring, let’s say “Chris travels” and “Chris gets dumped”, it would be P(Chris travels ∩ Chris gets dumped) [which is equal to 1]. If two events, A and B, are independent (that is, the outcome of one does not depend on the other), P(A∩B)=P(A)*P(B).

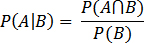

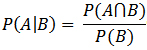

P(A|B) – This is known as a conditional probability, and it is the probability of event A given that event B occurs and is defined as:

If we want to find the probability of drawing a land given that we kept a one-land hand, it would be the probability of both drawing a land and our original seven-card hand containing only one land divided by the probability of our original hand containing one land. If events A and B are independent, P(A|B) = P(A).

The Hypergeometric Distribution

The Hypergeometric distribution is a discrete distribution that is most frequently used when dealing with selecting a sample from a population without replacement (which is what we’ll be doing the majority of the time and, if people want it, cases where we are not can be addressed in future articles).

The Probability Mass Function

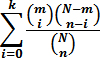

The probability mass function (pmf) of the distribution is given by:

Where:

N is the size of the population (the size of the deck for our case)

m is how many successes are possible within the population (if you’re looking to draw lands, this would be the number of lands in the deck)

n is the size of the sample (how many cards we’re drawing)

k is how many successes we desire (if we’re looking to draw three lands, k=3)

For the rest of this article, “pmf(x, n)”, will be the pmf of the scenario we’re talking about with k=x and n=n.

A Simple Example

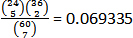

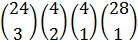

Let’s say we have a sixty-card deck with twenty-four lands and we want to determine how many seven-card hands will have exactly five lands in them. We would plug the following numbers into our hypergeometric pmf:

N = 60

m = 24

n = 7

k = 5

Which gives us:

Rather than doing this by hand, a much easier way to do this is to use the HYPGEOM.DIST function in Excel with the inputs as (k, n, m, N). The “hyp pmf” tab in the attached spreadsheet provides an easy way of using the function as you can see here (for all spreadsheet tabs included, a green background indicates those are the fields to be edited while a red background indicates a result):

| Deck Size | 60 |

| Number of draws | 7 |

| Number of successes in deck | 24 |

| Successes desired | 5 |

| Result | 0.069335 |

Exercise 1 (answers to all exercises are at the bottom of the article): What is the probability of drawing two copies of a card you have four of after seeing ten cards in a sixty-card deck?

Expected Value

The expected value is simply  , so if a sixty-card deck has twenty-four lands, we have 7 × 24 ÷ 60=2.8 lands in an average seven-card hand.

, so if a sixty-card deck has twenty-four lands, we have 7 × 24 ÷ 60=2.8 lands in an average seven-card hand.

The Cumulative Distribution Function

Instead of wanting to know how often something occurs for a specific value, it is often helpful to instead find the probability for a range of occurrences. Let’s say we have a binary condition for our mulligan decisions: We keep all hands with two, three, or four lands, but we mulligan any hand that does not meet this standard. We could simply add the pmfs for the cases, where k=2, 3, and 4, but we have a more powerful tool available to us: the cumulative distribution function (cdf), which gives us the cumulative probability of having k or fewer successes and is expressed as:

For the rest of this article, “cdf(x)”, will be the cdf of the scenario we’re talking with x = k. For example, cdf(5) here would equal 0.986558, which means there’s a 98.66% chance of having five or fewer lands in your opening seven given this deck configuration.

cdf Example

In order to find the probability of having a keepable hand in the situation presented above (where we only keep hands with two to four lands), we would take cdf(4) [which includes all hands with four or fewer lands] and subtract cdf(1) [which removes all hands with zero or one lands] from it, giving us 0.774567.

The spreadsheet “hyp cdf” takes the same inputs as “hyp pmf”, but it allows you to input a range of values (although the formula in the spreadsheet is implemented as cdf(upper bound) − cdf(lower bound) + pmf(lower bound) to make the case where 0 is the lower bound work).

| Deck Size | 60 |

| Number of draws | 7 |

| Number of successes | 24 |

| Successes desired between | 2 |

| and | 4 |

| Result | 0.774567 |

Exercise 2: What’s the probability of drawing at least one copy of your four-of in a sixty-card deck after drawing twelve cards?

Imposing multiple conditions

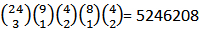

A more nuanced way to look at the hypergeometric pmf is that the denominator is the count of all possible combinations, while the numerator is the count of combinations we consider successes. The number of total combinations is quite simple: It’s the number of ways to pick n cards from a deck of size N, therefore the total number of possible combinations is just  .

.

The numerator is a bit trickier to understand, so we’ll go over it using examples. For these examples, we’ll assume a deck size of sixty containing twenty-four Swamps and four copies of nine unique cards (cards A, B, C, D, E, F, G, H, and I). If our numerator is:

The first number (“24 choose 5”) is the number of combinations of five items from a group of twenty-four, which is then multiplied by the number of combinations containing two items from a group of thirty-six. This represents the number of hands with exactly five lands in them.

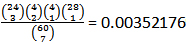

Let’s say we want a hand containing exactly three lands, exactly two copies of card A, exactly one copy of card B, and the last card can be anything else:

This is the number of combinations containing three lands multiplied by the number of combinations containing two of card A multiplied by the number of combinations containing one of card B multiplied by the number of combinations having the last card be anything else. To find the probability of this case occurring, we simply take the number we calculated above and divide it by the total number of combinations, which gives us:

What we’ve just done, using nothing but our own intuition, is convert the hypergeometric pmf to a multivariate hypergeometric pmf. Included in the spreadsheet you downloaded earlier is a tab named “Multiple Conditions,” which you can use to help you work along with what’s been done in this section.

| Deck Size | 60 |

| Number of draws | 7 |

| Number of successes of case 1 | 24 |

| Successes of case 1 desired | 3 |

| Number of successes of case 2 | 4 |

| Successes of case 2 desired | 2 |

| Number of successes of case 3 | 4 |

| Successes of case 3 desired | 1 |

| Number of successes of case 4 | 28 |

| Successes of case 4 desired | 1 |

| Number of successes of case 5 | |

| Successes of case 5 desired | |

| Result | 0.00352176 |

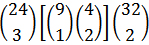

I’ll go through one more example before leaving some exercises for the reader. Let’s say we want a hand containing exactly three lands, exactly two copies of any card (besides a land), and any two other cards:

The first number is, once again, the number of combinations possible for three lands. This is multiplied by the term in brackets, which is how many cards we can pick to have a pair (9) “choose” how many pairs we want (1), which is multiplied by how many combinations of a specific card exist (“4 choose 2”), which is then multiplied by the number of combinations containing two cards from what’s left. We can use the spreadsheet to do this one as well:

| Deck Size | 60 |

| Number of draws | 7 |

| Number of successes of case 1 | 24 |

| Successes of case 1 desired | 3 |

| Number of successes of case 2 | 4 |

| Successes of case 2 desired | 2 |

| Number of successes of case 3 | 32 |

| Successes of case 3 desired | 2 |

| Number of successes of case 4 | |

| Successes of case 4 desired | |

| Number of successes of case 5 | |

| Successes of case 5 desired | |

| Result | 0.015596365 |

| Multiply by? | 9 |

| Result | 0.140367283 |

Exercise 3: How many combinations are there for exactly three lands and two pairs of any card in the deck?

Exercise 4: What’s the probability of having at least two lands, two of card A, one of card B, with the remaining two cards being anything but card A or B? NB: This is now a multivariate cdf.

Conditional Probability

The last item about the hypergeometric distribution (which is also conveniently an excellent segue into the next topic) is on how to handle conditional probabilities. We defined what conditional probability was above, but it’s defined as:

We’ll take our example for this section from the article I maligned in the introduction to show how easy it is to solve a specific case if we work from general principles (rather than write some obfuscated C code to solve one particular problem). The question presented is: What’s the chance of drawing a land on the first turn given you draw a starting hand with three or six lands?

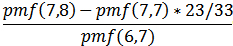

In this scenario, we start with forty cards, seventeen of which are lands. Our first step is to figure out what events A and B represent: A is drawing a land on the first turn of the game, while B is having a starting hand with three or six lands. Once we figure this out, we can apply what we’ve learned earlier here. For the case in which we start with a six-land hand:

There are two ways to do this. We can make indirect usage of the formula above and change how we see our population by removing seven cards: six lands and one nonland (giving us thirty-three cards to draw from with eleven lands) and get 11 ÷ 33 or we can make direct use of the formula above and use:

NB: Where the numerator came from is left as an exercise to the reader.

In the case where there are three lands in the starting hand, we can just use pmf(1,1) with N=33 and m=14, giving us 0.424242.

Now that we have that, we can continue with the next exercise in the article, which is finding the percent of the time you draw three spells in the first four turns. We can just use pmf(3,4) with different Ns and ms to generate these results as well. Here’s our table:

| Deck Size | 33 | 33 | |

| Number of draws | 4 | 4 | |

| Successes desired | 3 | 4 | |

| Number of successes | 18 | 18 | |

| Result 2 lands | 0.29912 | 0.07478 | 0.3739 |

| Number of successes | 19 | 19 | |

| Result 3 lands | 0.331525 | 0.094721 | 0.426246 |

| Number of successes | 20 | 20 | |

| Result 4 lands | 0.36217 | 0.118402 | 0.480572 |

| Number of successes | 21 | 21 | |

| Result 5 lands | 0.390029 | 0.146261 | 0.53629 |

| Number of successes | 22 | 22 | |

| Result 6 lands | 0.413978 | 0.178763 | 0.592742 |

| Number of successes | 23 | 23 | |

| Result 7 lands | 0.432796 | 0.216398 | 0.649194 |

I’ll leave the two cases about “spell advantage” as an exercise to the reader, but if enough people want it worked out, I’ll do it in a blog post or something. We’ll cover conditional probability in much more depth when we go over Bayes’s Theorem.

Chris Mascioli

@dieplstks on Twitter

Exercise Answers

- 0.113046

- 0.600972

- 0.010665